Helping self-driving cars become reliable chauffeurs

Why robustness is key for artificial intelligence in vehicle software.

Driving a car in foggy conditions can be challenging. You have to reduce your speed, turn on the rear fog lights, and stay alert for road markings and objects in the misty distance. The situation is clearly not ideal, but all things considered, you can still drive pretty well. In other words, you can still perform under certain limitations, or ‘perturbations’. The same goes for our vehicle software algorithms: the ability of a system to keep functioning correctly under perturbation is called robustness.

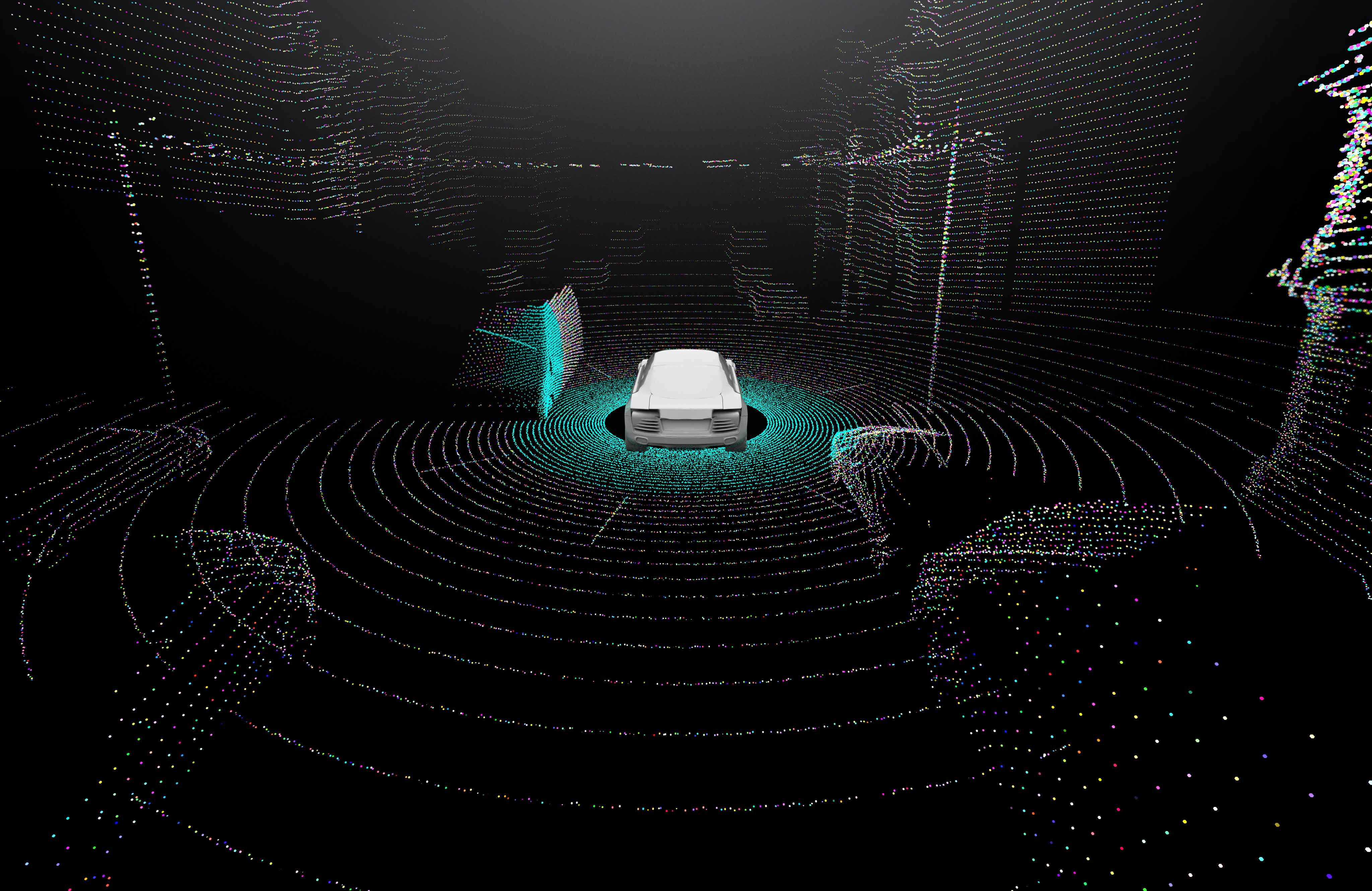

Now let’s take that idea of robustness into the world of automated driving. Even with a little bit of dirt on the camera lens, or with a sensor that isn’t quite mounted straight, a fully self-driving car will still have to drive correctly and safely. And no matter what color the other cars on the road are or what clothes pedestrians are wearing, the system shouldn’t have any trouble identifying them.

While this doesn’t present a big challenge to human drivers, it’s quite a challenge for vehicle software that relies on artificial intelligence (AI) to make certain decisions. These AI modules must be trained until they are robust enough that perturbations no longer cause them to make an incorrect, and potentially dangerous, decision.

At present, even the most advanced AI systems still struggle here. Minor changes to the input data processed by an AI module can be enough to confuse the system and lead it to wrong conclusions. Such a change might be nothing more than a difference of a couple of pixels in an image. Building reliable and secure machine-learning algorithms is therefore an important area of scientific research, not only for self-driving vehicles but across all industries.

Testing classification

Misleading conclusions like these arise in ‘classification’, a supervised-learning task in which an AI module assigns each input to one of several categories. Imagine you have a group of pictures that are all similar to each other, but nevertheless unique. Perhaps some pictures are of dogs and others are of cats. They’re all mammals that walk on four legs and have a head and a tail, but they’re still different. A trained model separates the two categories along what is called a classification boundary. If you slightly modify one of the pictures of a cat so that, to the model, it looks more like a dog, the image can end up on the other side of that boundary and be classified as a dog, even though a human would still clearly see a cat.
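To make the idea of a classification boundary concrete, here is a minimal Python sketch. It assumes a toy linear classifier over an 8-pixel grayscale ‘image’; the weights, bias and pixel values are all hypothetical, chosen only for illustration, and real perception networks are far larger and non-linear. The perturbation follows the fast gradient sign method: for a linear score, the gradient with respect to the pixels is simply the weight vector, so shifting each pixel by a small `epsilon` in the direction of its weight’s sign pushes the score toward the ‘dog’ side of the boundary.

```python
# Toy illustration only: a linear "cat vs dog" classifier over 8 pixels.
# WEIGHTS, BIAS and the pixel values below are hypothetical, not learned.
WEIGHTS = [0.9, -0.4, 0.7, -0.8, 0.5, 0.6, -0.3, 0.8]
BIAS = -0.2


def score(pixels):
    """Linear decision score: > 0 means 'dog', <= 0 means 'cat'.
    The classification boundary is the set of inputs where score == 0."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS


def classify(pixels):
    return "dog" if score(pixels) > 0 else "cat"


def sign(v):
    return (v > 0) - (v < 0)


def perturb_towards_dog(pixels, epsilon):
    """Fast-gradient-sign-style step. For a linear score the gradient w.r.t.
    the pixels is WEIGHTS, so moving every pixel by epsilon along sign(weight)
    raises the score by up to epsilon * sum(|w|) (less if clipping kicks in).
    Pixels are clipped to the valid range [0, 1]."""
    return [min(1.0, max(0.0, p + epsilon * sign(w)))
            for w, p in zip(WEIGHTS, pixels)]


cat_image = [0.2, 0.6, 0.3, 0.7, 0.4, 0.3, 0.5, 0.2]
adversarial = perturb_towards_dog(cat_image, epsilon=0.05)

print(classify(cat_image), round(score(cat_image), 2))      # cat -0.22
print(classify(adversarial), round(score(adversarial), 2))  # dog 0.03
```

Every pixel moves by only 0.05 on a 0 to 1 scale, yet the predicted label flips from cat to dog, because the original image sat close to the boundary.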

To test classification and train the robustness of an AI module, you can deliberately perturb an image. For example, you can rotate it, change its colors, or simulate weather conditions. Training AI on such perturbed examples helps it make the right decisions more consistently.
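Perturbations like these are commonly applied as data augmentation. The following Python example is a minimal sketch under stated assumptions: images are small grayscale grids with values in [0, 1], ‘recoloring’ is approximated by a brightness change, and fog is modeled crudely as blending every pixel toward a uniform haze value. All function names are hypothetical; real pipelines typically use image-processing libraries and more physically motivated weather models.

```python
import random

Image = list[list[float]]  # grayscale pixels in [0, 1], row-major (assumption)


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale every pixel by `factor`, clipped to [0, 1] (stand-in for recoloring)."""
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in img]


def add_fog(img: Image, density: float, haze: float = 0.8) -> Image:
    """Crude fog model: blend each pixel toward a uniform haze value.
    density = 0 leaves the image unchanged, density = 1 gives pure haze."""
    return [[(1 - density) * p + density * haze for p in row] for row in img]


def augment(dataset, copies_per_image: int = 3, seed: int = 0):
    """Yield each original (image, label) pair plus randomly perturbed copies.
    The label is kept unchanged: a rotated or foggy cat is still a cat."""
    rng = random.Random(seed)
    for img, label in dataset:
        yield img, label
        for _ in range(copies_per_image):
            out = img
            if rng.random() < 0.5:
                out = rotate_90(out)
            out = adjust_brightness(out, rng.uniform(0.7, 1.3))
            out = add_fog(out, rng.uniform(0.0, 0.6))
            yield out, label


cat = [[0.1, 0.2], [0.3, 0.4]]
augmented = list(augment([(cat, "cat")], copies_per_image=3))
print(len(augmented))  # 4 (the original plus three perturbed copies)
```

Keeping the original label for every perturbed copy is the key design choice: it teaches the model that a rotated, darker or foggier cat is still a cat.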

Context is key

But there are many more factors to consider when ensuring robustness for self-driving vehicle AI. Consider time, for example: if a vehicle AI system doesn’t recognize a pedestrian within 5 milliseconds, this might not be a problem, because the system may not need to be quite that fast. But if it can’t recognize a pedestrian within 10 seconds, that certainly is a problem. Time isn’t the only factor, though. The particular combination of circumstances in a scene also affects whether the AI module labels objects correctly. A system might be better at identifying a pedestrian walking across the road than at identifying a cyclist riding behind a car.

When determining robustness, it’s crucial to consider it in the context of the safety of the overall function. Safety is not an abstract concept; it’s always a property of a system. On their own, neural networks and perception functions are never safe or unsafe, because their errors pose no immediate risk to the vehicle’s environment or occupants; errors only become hazardous through their effect on how the vehicle behaves.

A key part of the safety process is the analysis and evaluation of performance limitations, i.e. possible sources of error and their effects. This is where the robustness of neural networks becomes especially important. The required degree of robustness is then derived from the context, including the environment and the driving situation, and from the safety goals, so as to keep the residual risk of the overall function as small as possible. The aim is to maximize the number of conditions that self-driving vehicle software can handle with a high degree of robustness.

With all of these considerations, you can imagine that training a vehicle AI module is an extremely long and complex process. But it’s a prerequisite for making self-driving vehicles robust. Only in this way can they become our reliable chauffeurs of the future.