How to reach higher automation levels with AI-based sensor fusion

To bring higher levels of automated driving and safety to the road, vehicles need to be able to effectively perceive their environment and process vast amounts of data. Cristina Rico, Head of Sensor Fusion at CARIAD, explains the need for a transition from object-level to AI-based sensor fusion.

Sensor fusion combines data from sensors to create an environment model that supports automated driving functions.

ADAS/AD is a key development area for us at CARIAD. We’re currently working on projects to bring Level 4 automated driving to vehicles on the road for use in individual mobility. By introducing new driving functions, we’ll greatly improve safety. At the same time, all of us will gain much more quality time in our cars, with the freedom to read a book, enjoy a movie or take part in a business call, all while the car drives itself on the highway.

However, to achieve high levels of automated driving – and with them this greater safety, comfort and convenience – we need to successfully transition from classic, object-level sensor fusion to the more advanced approach of AI-based sensor fusion.

What is sensor fusion?

Before explaining the advantages of the AI-based sensor fusion that we’re working on at CARIAD, it’s important to understand the overarching topic of sensor fusion. Sensor fusion brings together information from different sensors and generates the first step of the so-called environment model. This model provides a comprehensive view of the vehicle’s surroundings. The algorithms used in sensor fusion should ensure both spatial and temporal consistency.

To power sensor fusion, we need inputs. These inputs are typically sensors, such as cameras, lidar, radar and ultrasonic sensors, but can also include information from the car-to-car communication system.

Following sensor fusion, the output is the environment model, which can be divided into different sub-products:

1. The static environment model describes all static raised objects, such as walls or parked vehicles.

2. The dynamic environment model describes all potentially moving objects, such as pedestrians and cars.

3. The drivable areas include empty space and areas to overtake.

4. The regulatory environment model describes all the elements that have legal relevance, such as lane markings and traffic signs.

This environment model can then be used by the car to power automated driving functions.
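As a rough illustration, the four sub-products above could be held in a single container like the one sketched below. This is only a sketch: all class names, field names, units and the vehicle-frame coordinate convention are assumptions made for this example, not CARIAD’s actual data model.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Assumed convention: (x, y) in metres, relative to the vehicle.
Point = Tuple[float, float]


@dataclass
class StaticObject:
    kind: str              # e.g. "wall" or "parked_vehicle"
    outline: List[Point]   # simplified 2D outline


@dataclass
class DynamicObject:
    kind: str              # e.g. "pedestrian" or "car"
    position: Point
    velocity: Point        # (vx, vy) in m/s


@dataclass
class RegulatoryElement:
    kind: str              # e.g. "lane_marking" or "traffic_sign"
    position: Point


@dataclass
class EnvironmentModel:
    """Hypothetical container for the four sub-products of the environment model."""
    static_objects: List[StaticObject] = field(default_factory=list)
    dynamic_objects: List[DynamicObject] = field(default_factory=list)
    drivable_areas: List[List[Point]] = field(default_factory=list)  # polygons
    regulatory_elements: List[RegulatoryElement] = field(default_factory=list)


model = EnvironmentModel()
model.static_objects.append(StaticObject("wall", [(5.0, -2.0), (5.0, 2.0)]))
model.dynamic_objects.append(DynamicObject("pedestrian", (12.0, 1.5), (0.0, -1.2)))
model.drivable_areas.append([(0.0, -1.8), (30.0, -1.8), (30.0, 1.8), (0.0, 1.8)])
model.regulatory_elements.append(RegulatoryElement("traffic_sign", (25.0, 3.0)))
```

A driving function would then read only the parts it needs, for example the dynamic objects and the drivable areas when planning an overtaking manoeuvre.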

An important side note is that sensor fusion is a rapidly and radically evolving topic. There is not yet an established state of the art, nor is there a single, accepted benchmark solution. Instead, there are various methods and approaches. At the same time, this is precisely what makes sensor fusion such an exciting discipline to work on.

Information from various kinds of sensors is fused together to produce an environment model.

What is object-level sensor fusion?

Now, let’s compare two major approaches to sensor fusion, beginning with object-level sensor fusion. This method uses independent network backbones and sensor heads to predict objects for each sensor individually. Sensor fusion then receives these object lists and fuses the individual objects into one unified list containing the fused objects of all sensors.
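To make the idea of fusing object lists more concrete, here is a minimal sketch. It assumes that each sensor delivers a list of objects with a label and a 2D position, and it associates objects by nearest distance within a gate. The function name, the dictionary layout, the gate value and the simple position averaging are all assumptions for illustration; production systems typically use more elaborate association and tracking.

```python
import math


def fuse_object_lists(camera_objects, radar_objects, gate=2.0):
    """Merge two per-sensor object lists into one unified list.

    Objects closer than `gate` metres are treated as the same object
    (an assumed, deliberately simple association rule).
    """
    fused = []
    unmatched_radar = list(radar_objects)
    for cam in camera_objects:
        candidates = [
            rad for rad in unmatched_radar
            if math.dist(cam["position"], rad["position"]) <= gate
        ]
        if not candidates:
            fused.append(dict(cam, sources=["camera"]))
            continue
        # Pick the nearest radar object and average the two positions.
        match = min(candidates, key=lambda rad: math.dist(cam["position"], rad["position"]))
        unmatched_radar.remove(match)
        position = tuple((c + r) / 2 for c, r in zip(cam["position"], match["position"]))
        fused.append({"label": cam["label"], "position": position,
                      "sources": ["camera", "radar"]})
    # Radar objects without a camera partner are kept as they are.
    fused.extend(dict(rad, sources=["radar"]) for rad in unmatched_radar)
    return fused


camera_list = [{"label": "car", "position": (10.0, 0.0)},
               {"label": "pedestrian", "position": (5.0, 3.0)}]
radar_list = [{"label": "car", "position": (11.0, 0.0)},
              {"label": "unknown", "position": (30.0, -2.0)}]
fused_list = fuse_object_lists(camera_list, radar_list)
```

Note that this step only ever sees objects that a single sensor has already detected on its own.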

One drawback of this fusion strategy is the late combination of information. If two or more sensors only have partial information on a certain object, that object might not be detected by any sensor. However, if the partial information is already combined at the feature level and the combined features are provided to an object detection head, it’s likely that the object will be detected. This is exactly what AI-based sensor fusion does.
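A toy calculation shows why combining late can lose objects. In the sketch below, each sensor reports a confidence score for the same object, and the threshold, the scores and the noisy-OR combination rule are assumptions chosen purely for illustration. The noisy-OR rule stands in for a learned detection head; it is not how a neural network actually fuses features.

```python
import math

DETECTION_THRESHOLD = 0.5  # assumed value for this example


def object_level_decision(sensor_scores, threshold=DETECTION_THRESHOLD):
    """Each sensor decides alone; the object survives only if one sensor is sure."""
    return any(score >= threshold for score in sensor_scores)


def feature_level_decision(sensor_scores, threshold=DETECTION_THRESHOLD):
    """Evidence is combined first (noisy-OR), then a single decision is made."""
    combined = 1.0 - math.prod(1.0 - score for score in sensor_scores)
    return combined >= threshold


# Two sensors each see only part of the object.
partial_scores = [0.4, 0.4]
detected_late = object_level_decision(partial_scores)    # False: no sensor is sure
detected_early = feature_level_decision(partial_scores)  # True: combined evidence is about 0.64
```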

Object-level sensor fusion predicts objects for each sensor individually and fuses them to create one unified list.

What is AI-based sensor fusion?

Instead of fusing object lists from different sensors, AI-based sensor fusion combines information at the feature level, and predictions are made only once and on the basis of all available information – not for each sensor individually.

The foundation of AI-based, low-level sensor fusion is the set of feature maps from the individual sensors, which are extracted by independent network backbones. Spatial fusion transforms these feature maps into a unified space and fuses them together. The result is a unified representation of the vehicle’s environment, containing the information of all sensors. A commonly used space is the bird’s eye view.
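The fusing part of this step can be sketched as follows, assuming that both feature maps have already been transformed into the same bird’s eye view grid. Here a feature map is a grid of cells, each holding a short list of feature values, and fusion is a simple per-cell concatenation. The function name, the grid size and the choice of concatenation are assumptions for this example; other fusion operators are equally possible.

```python
def fuse_bev_feature_maps(camera_bev, lidar_bev):
    """Concatenate per-cell features of two maps that share one bird's eye view grid."""
    same_rows = len(camera_bev) == len(lidar_bev)
    same_cols = same_rows and all(
        len(cam_row) == len(lidar_row)
        for cam_row, lidar_row in zip(camera_bev, lidar_bev)
    )
    if not same_cols:
        raise ValueError("feature maps must share the same bird's eye view grid")
    return [
        [cam_cell + lidar_cell for cam_cell, lidar_cell in zip(cam_row, lidar_row)]
        for cam_row, lidar_row in zip(camera_bev, lidar_bev)
    ]


# 2 x 2 grid: two camera channels and one lidar channel per cell (toy values).
camera_bev = [[[0.1, 0.7], [0.0, 0.2]],
              [[0.9, 0.3], [0.4, 0.4]]]
lidar_bev = [[[0.8], [0.0]],
             [[0.6], [0.1]]]
fused_bev = fuse_bev_feature_maps(camera_bev, lidar_bev)
# Each cell now carries three channels with information from both sensors.
```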

On top of this, temporal fusion incorporates time as an additional source of information. It uses the output of the spatial fusion from previous time steps, fusing current and past information at the feature level to improve feature quality and compensate for missing features. The key component is a temporal memory, which aggregates information and carries it forward over time.
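One very simple way to picture such a temporal memory is a running blend of past and current features. In the sketch below, the memory is a single-channel grid, a missing feature is marked with None, and the blending weight is a fixed number. All of these are assumptions for illustration: a real temporal memory is learned, and this sketch also ignores the vehicle’s own motion between time steps.

```python
def update_temporal_memory(memory, current, keep=0.7):
    """Blend the previous memory with the current fused features, cell by cell.

    `keep` is an assumed fixed weight for past information. Cells that are
    missing in the current frame (None) fall back to the memory value.
    """
    updated = []
    for memory_row, current_row in zip(memory, current):
        row = []
        for past, now in zip(memory_row, current_row):
            if now is None:
                row.append(past)  # compensate for the missing feature
            else:
                row.append(keep * past + (1.0 - keep) * now)
        updated.append(row)
    return updated


memory = [[0.0, 1.0]]
frame = [[1.0, None]]  # the second cell is not observed in this frame
memory = update_temporal_memory(memory, frame, keep=0.5)
```

After this update, the first cell moves halfway towards the new observation, while the second cell keeps its remembered value.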

The result of the spatial and temporal fusion steps is a high-quality, multimodal feature map in a unified 3D space. Based on this feature map, the heads, which define the environment model, compute the final predictions. Since an environment model for automated driving must provide a variety of information about the environment, we use a multi-head architecture. Each individual head is trained for one specific task, such as detecting objects, classifying road signs, or segmenting roads to detect drivable space.
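The multi-head idea can be sketched as several task-specific functions that all read the same fused feature map. In this example, the heads are toy threshold rules standing in for trained networks, and the head names, thresholds and single-channel feature map are assumptions made for illustration only.

```python
from typing import Callable, Dict, List, Tuple

FeatureMap = List[List[float]]  # toy single-channel bird's eye view grid


def object_head(features: FeatureMap) -> List[Tuple[int, int]]:
    """Toy stand-in for an object detection head: cells with strong activation."""
    return [
        (row_idx, col_idx)
        for row_idx, row in enumerate(features)
        for col_idx, value in enumerate(row)
        if value > 0.8
    ]


def drivable_space_head(features: FeatureMap) -> List[List[bool]]:
    """Toy stand-in for a segmentation head: weak activation counts as free space."""
    return [[value < 0.2 for value in row] for row in features]


def run_heads(features: FeatureMap, heads: Dict[str, Callable]) -> Dict[str, object]:
    """Every head computes its own prediction from the same shared feature map."""
    return {name: head(features) for name, head in heads.items()}


fused_features = [[0.1, 0.9],
                  [0.0, 0.5]]
predictions = run_heads(
    fused_features,
    {"objects": object_head, "drivable_space": drivable_space_head},
)
```

Because the heads share one feature map, a new task can be added as another entry in the dictionary without changing the fusion steps that produce the features.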

AI-based sensor fusion is a more advanced approach that makes predictions only once and on the basis of all information.

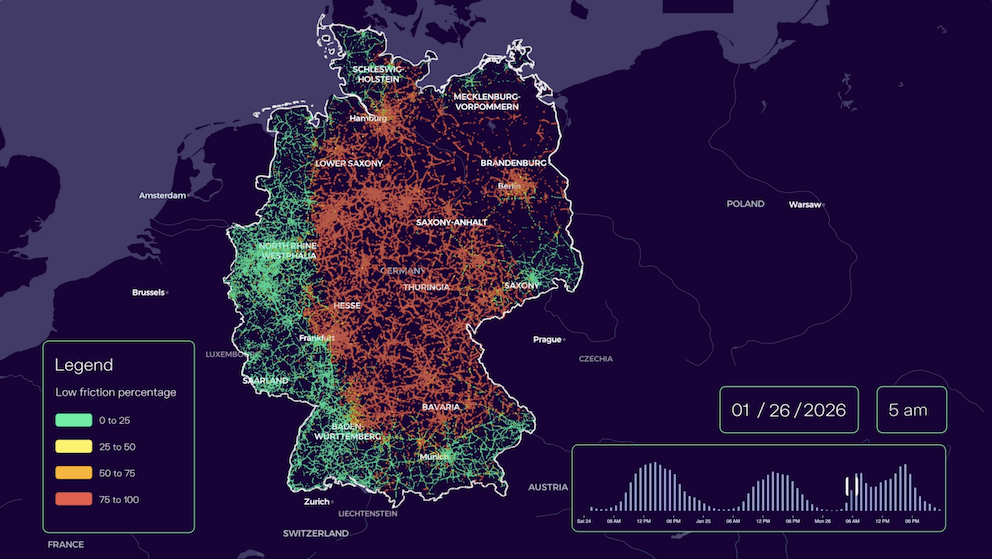

What does the car see?

Below, you can see a visual representation of AI-based sensor fusion for 3D object detection. This is what the car ‘sees’. On the left side is the camera image. The camera perception backbones calculate the camera feature maps. These maps are then transformed by an autoencoder into a bird’s eye view image (bottom-left corner). At the same time, a lidar backbone computes feature maps from point clouds and also presents them in a bird’s eye view (bottom-right corner). Since both feature maps are now in a common space, they can be fused to provide improved feature maps to the object detection head. In the middle of the image, you can see the combined results of this multimodal object detection.

Multimodal object detection is the result of various inputs, such as from cameras and lidar.

Improving safety by using a variety of car sensors

Safety is our highest priority at CARIAD and especially crucial in the field of automated driving. In order to ensure the maximum safety of our customers, we need to maximize our sources of information – our sensors.

We’ve integrated a full camera belt around the car, which gathers data processed by our own in-house video perception team. Additionally, we’ve installed a radar belt as a further source of information with a complementary measurement principle. Finally, a laser system scans the surroundings and measures distances with high accuracy and resolution.

Accelerating the development of ADAS/AD functions

There are two main reasons for using AI in sensor fusion. Firstly, AI technologies allow us to scale faster. When high performance is required – as is the case in the field of ADAS/AD – the number of corner cases that have to be taken into account is higher. It’s simply too inefficient to have coders manually check every one of these cases and develop individual algorithms to solve them. This is why, in the top-right corner of the image below, you can see how the performance improvement flattens at high levels of automation.

Secondly, using AI in sensor fusion allows us to achieve higher levels of automation. The image below also illustrates this – you can see how, once data-driven development starts, the level of automation reached is higher because we can attain higher performance.

With full AI-based sensor fusion, we can reach higher levels of automated driving, i.e. greater capabilities.

Of course, to support sensor fusion and advance data-driven development, we need data. In other parts of our software stack, such as camera and lidar perception, our application of data-driven development is already state of the art. We’re supporting this development with an intelligent data acquisition system, namely the Big Loop. The Big Loop helps us to find the value in this sea of information and make automated driving even safer and more comfortable.